At least one politician wants more transparency in the wake of an AI-generated attack ad. Representative Yvette Clarke, a New York Democrat, has introduced a bill, the REAL Political Ads Act, that would require political ads to disclose the use of generative AI through conspicuous audio or text. The amendment to the Federal Election Campaign Act would also have the Federal Election Commission (FEC) create regulations to enforce the requirement, although the measure would take effect January 1st, 2024, regardless of whether rules are in place.

The proposed law is meant to help fight misinformation. Clarke characterizes this as an urgent matter ahead of the 2024 election: generative AI can “manipulate and deceive people on a large scale,” the representative says. She believes unchecked use could have a “devastating” effect on elections and national security, and that laws haven’t kept up with the technology.

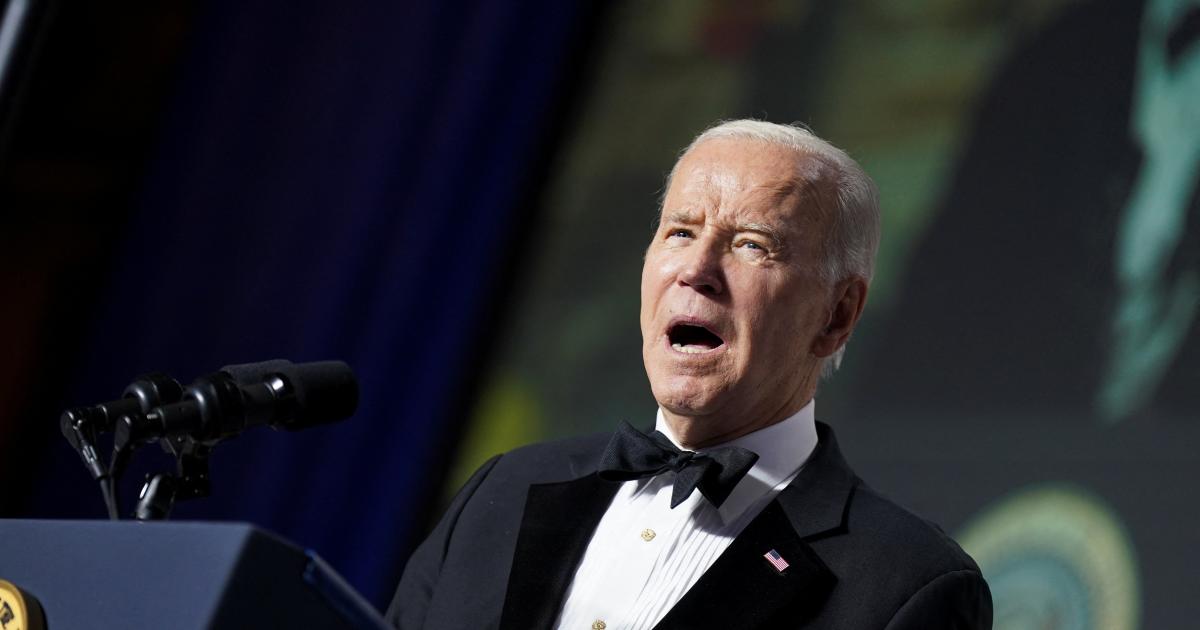

The bill comes just days after Republicans used AI-generated visuals in a political ad speculating what might happen during a second term for President Biden. The ad does include a faint disclaimer that it’s “built entirely with AI imagery,” but there’s a concern that future advertisers might skip disclaimers entirely or lie about past events.

Politicians already hope to regulate AI. California’s Rep. Ted Lieu put forward a measure that would regulate AI use on a broader scale, while the National Telecommunications and Information Administration (NTIA) is asking for public input on potential AI accountability rules. Clarke’s bill is more targeted and clearly meant to pass quickly.

Whether or not it does isn’t certain. The act has to pass a vote in a Republican-led House, and the Senate has to develop and pass an equivalent bill before the two bodies of Congress reconcile their work and send a law to the President’s desk. Success also won’t prevent unofficial attempts to fool voters. Still, this might discourage politicians and action committees from using AI to fool voters.